A Rube Goldberg Machine for Container Workflows

Part of Docker Inc’s grand vision for the container ecosystem was the primacy of Docker Hub for hosting and distributing container images. As the container ecosystem has evolved, Docker Hub has become less relevant.

GitHub Actions (GHA) has won over developers. We are at the point where GHA has become the CI/CD platform of choice for projects that use GitHub to host their code. GitHub provides private package hosting for JavaScript, Ruby, Java, .NET and container images, with Python and PHP slated for some time in 2022. Over time GitHub is likely to become the go-to place for official Docker images from open source projects.

While it is possible to pull down your container images from GitHub Container Registry (GHCR), there are often benefits or even a requirement to host the images near where they will run. All of the large cloud providers offer their own container registry. In the case of AWS, this is the Amazon Elastic Container Registry or ECR.

The common pattern is to build a Docker image using a GitHub Action. If all the tests pass, this image is then pushed to ECR. The problem with this pattern is that long-lived credentials are used by the Actions. While in theory you can rotate the credentials regularly, in practice this rarely happens. Often a global set of secrets is used, which means any user who can write to the Action YAML file can grab these credentials.

If the credentials leak, you will have trouble controlling their use. GitHub’s hosted Action runners can execute using any one of more than 8.3 million IPv4 addresses or 66 septillion (66 × 10^24) IPv6 addresses. Even if Amazon allowed IP restrictions covering such a large range, what’s the point? Any leaked creds could still be used from a lot of Azure boxes.

Another common pattern is to use AWS CodePipeline to rebuild the image on AWS. The problem with this approach is that you’re rebuilding your artefact. Software today has many dependencies, and any of these could have changed in the 15 minutes between the two builds running. Which of these could break your build? If you’re doing this the right way, you should be promoting build artefacts, not rebuilding them.

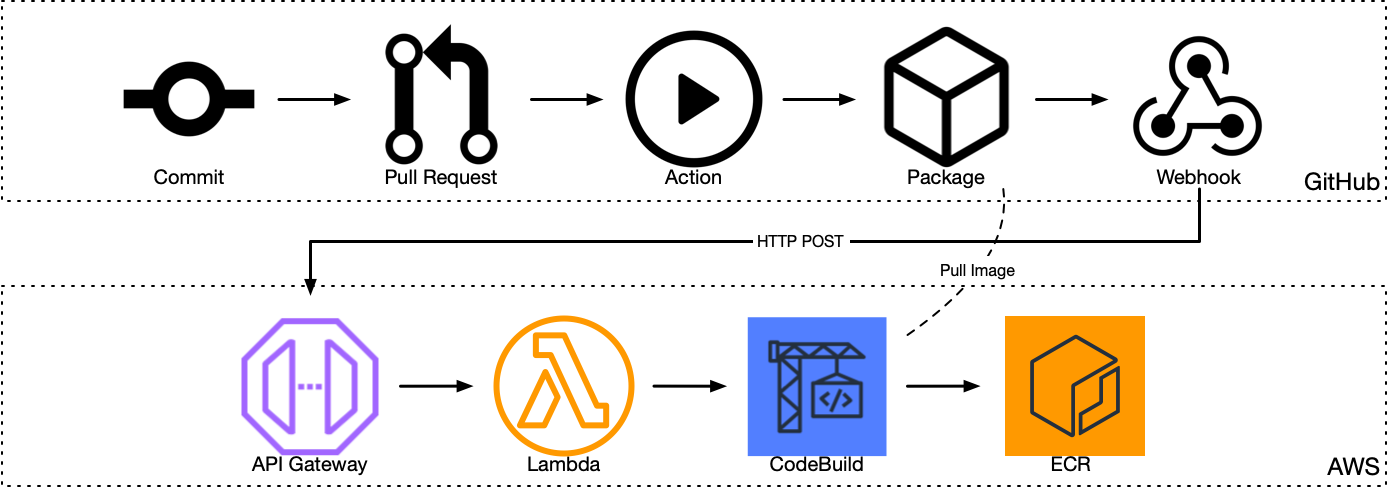

There is a better way. It might sound like a Rube Goldberg machine at first, but bear with me. It simplifies the security controls and reduces the credentials spread across platforms.

GitHub fires events via webhooks when state changes. One class of events is changes in package repositories. We can use these events to track when a container image is created or updated.
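As a rough illustration of picking out the relevant events, the sketch below filters webhook deliveries down to container image changes. It assumes the event name arrives in the `X-GitHub-Event` header as `package`, that the payload carries an `action` of `published` or `updated`, and that the package type is reported under `package.package_type`. The function name `is_container_update` is made up for this example; check the field names against GitHub's webhook documentation before relying on them.

```python
import json


def is_container_update(event_name: str, body: str) -> bool:
    """Return True when a delivery looks like a new or updated container image.

    event_name is the value of the X-GitHub-Event header (assumed to be
    "package" here); body is the raw JSON payload as a string.
    """
    if event_name != "package":
        return False

    payload = json.loads(body)
    if payload.get("action") not in {"published", "updated"}:
        return False

    # Assumption: the package type lives at package.package_type.
    # Compare case-insensitively rather than guess the exact casing.
    package = payload.get("package", {})
    return str(package.get("package_type", "")).lower() == "container"
```

Anything that fails this check can be acknowledged and ignored, so only container image changes go any further.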

We can create a simple API Gateway v2 HTTP API to receive these events. Lambda can then process the webhook data. Unfortunately Lambda doesn’t allow you to run Docker within a Lambda function. We can still use the Lambda function for validating the payload before kicking off a build in CodeBuild.
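Validating the payload mostly comes down to checking the HMAC signature GitHub attaches to each delivery. Here is a minimal sketch of that check, not the code from any particular project. It assumes the signature arrives in the `X-Hub-Signature-256` header in the form `sha256=<hex digest>`, computed over the raw request body with the webhook secret. The function name `verify_signature` is hypothetical.

```python
import hashlib
import hmac


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check a webhook body against its X-Hub-Signature-256 header value."""
    if not signature_header:
        return False

    # Recompute the digest over the raw bytes exactly as they were received.
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()

    # compare_digest runs in constant time, which avoids leaking how many
    # leading characters of the signature matched.
    return hmac.compare_digest(
        expected.encode("utf-8"), signature_header.encode("utf-8")
    )
```

The important detail is to verify the raw body before parsing it as JSON. Re-serialising the payload can change whitespace or key order and break the comparison.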

While CodeBuild is one of the more expensive compute services on AWS, it is the only serverless option that allows us to run the Docker CLI. It is still cheaper than running and maintaining an EC2 instance just to move Docker images around.

The build script uses the GitHub API to get a list of tags on the docker image, then does the same with ECR. Then it pulls the missing images from GHCR before pushing them to ECR.
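The core of that step is a set difference between the two tag lists. The sketch below shows the idea under some assumptions: the tag lists have already been fetched from the GitHub API and ECR, and the repository names are placeholders. The helpers `missing_tags` and `copy_commands` are hypothetical and are not taken from the actual build script.

```python
def missing_tags(ghcr_tags, ecr_tags):
    """Return the tags present in GHCR but absent from ECR, sorted by name."""
    present = set(ecr_tags)
    return sorted(tag for tag in set(ghcr_tags) if tag not in present)


def copy_commands(source_repo, target_repo, tags):
    """Build the docker pull/tag/push commands needed to copy each tag."""
    commands = []
    for tag in tags:
        src = f"{source_repo}:{tag}"
        dst = f"{target_repo}:{tag}"
        commands.append(["docker", "pull", src])
        commands.append(["docker", "tag", src, dst])
        commands.append(["docker", "push", dst])
    return commands


if __name__ == "__main__":
    # Placeholder repository names, for illustration only.
    todo = missing_tags(["v1", "v2", "latest"], ["v1"])
    for cmd in copy_commands(
        "ghcr.io/example/app",
        "123456789012.dkr.ecr.us-east-1.amazonaws.com/app",
        todo,
    ):
        print(" ".join(cmd))
```

Comparing by tag name alone will not notice a tag that has been moved to a new image, such as `latest`. Catching that would mean comparing image digests as well.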

While there are quite a few moving pieces in this process, the only credential used in the whole flow is the pre-shared key used for authenticating the GitHub webhook payloads.

Here is the full flow from git commit to the image landing in ECR:

If you’ve gotten this far and you’re thinking “this is pretty cool, I’d like to use it for my projects”, then check out the ghcr2ecr project on GitHub. It is a complete Terraform module for building this out. If you have feedback, please open an issue.